ANNIE V2: Diffusion-Inspired Cognition for a “Self-Thinking” Local LLM


ANNIE V2 started as a simple question: what if an LLM wasn’t just a chat box? What if it had a running internal state, could keep thinking in the background, and could build long-term memory the way people do?

The goal wasn’t to claim AGI. It was to prototype the mechanics that make cognition feel real: memory retrieval, a compact belief state, intrinsic motivation signals, and iterative refinement loops, all while staying local-first and observable.

Inside the ANNIE V2 Chat Interface: Screenshots

Chat view with the warming/online status indicator and navigation to Memory, Thinkers, and Code Review.

What ANNIE V2 Does

• Local chat with persistent memory: conversations are stored and can be recalled by semantic similarity, not just keywords.

• Belief state: a compact “working model” of goals + constraints that updates over time in the background.

• Background Thinker: an always-on loop that keeps exploring your goal space, generating “ticks” and pivots as it learns.

• Latent Future / forecasting mode: structured scenario thinking where the system organizes possibilities into actionable paths.

• Self-review signals: the system can surface internal notes (e.g., what it’s uncertain about, what it’s trying next) without dumping raw chain-of-thought.

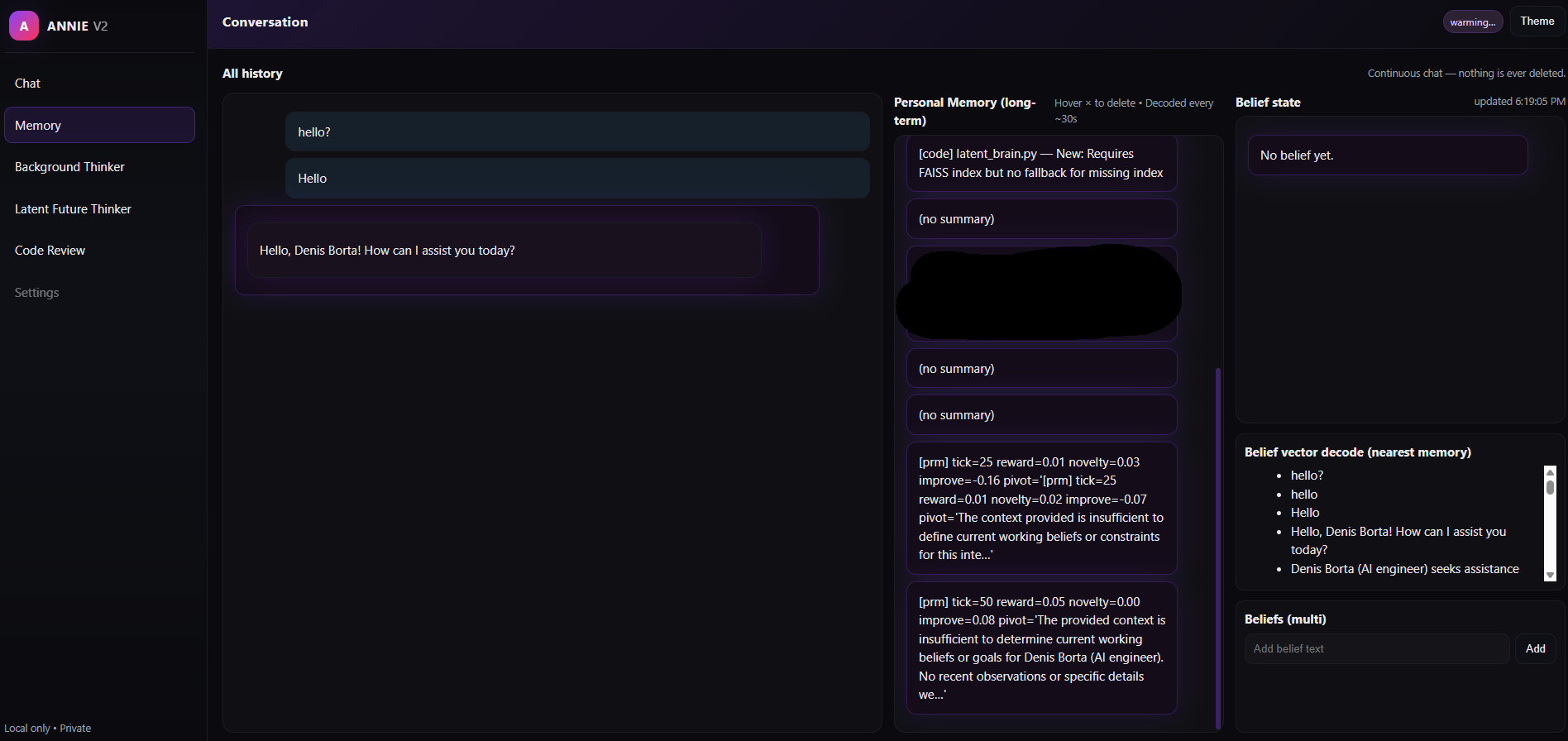

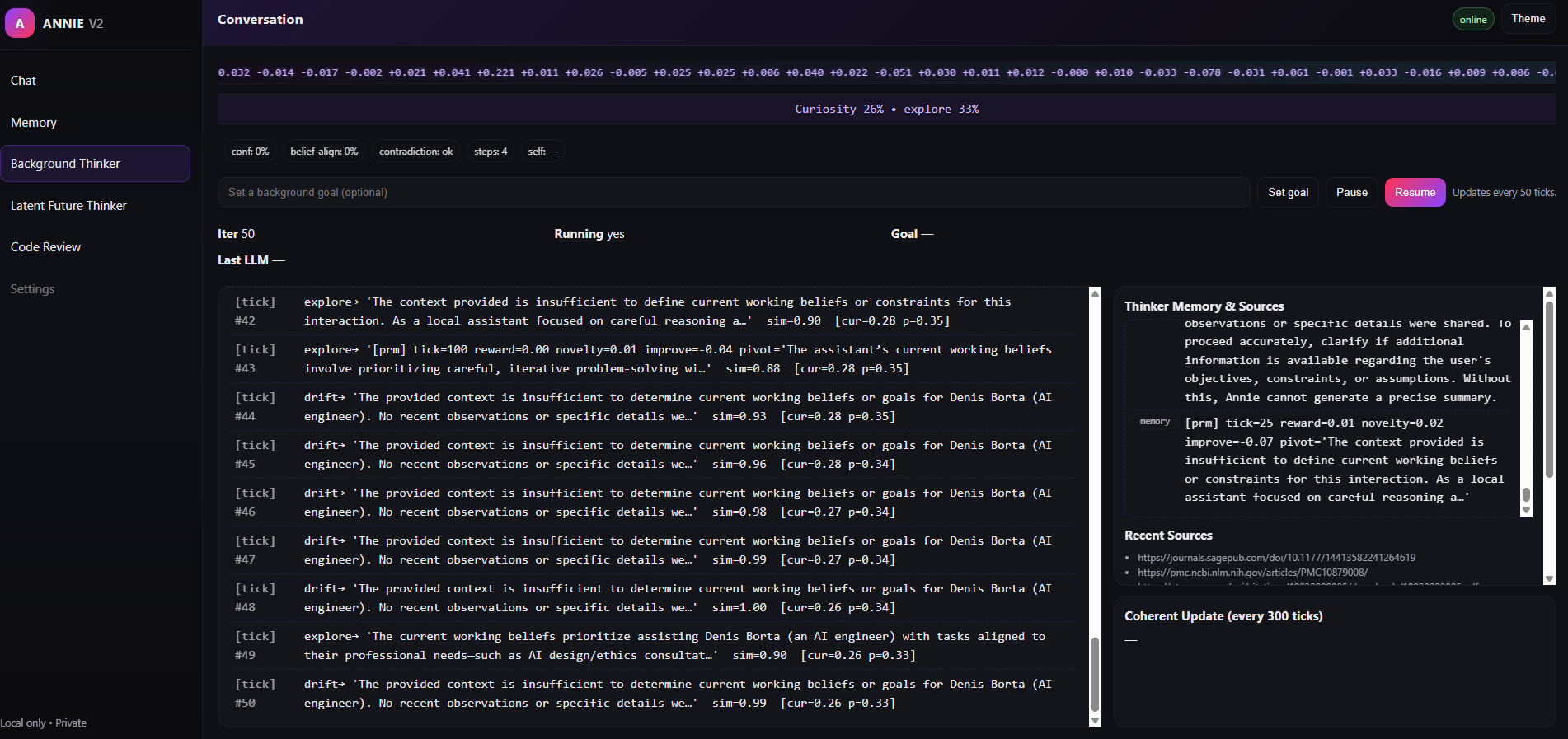

Inside ANNIE V2 Logistics: Screenshots

Memory timeline + long-term personal memory feed, with belief state, belief-vector decode (nearest memory), and optional manual belief injection.

Background Thinker loop: tick stream, curiosity/explore rates, and the active memory + sources panel.

How It Works (High Level)

ANNIE V2 is built around a small set of “cognitive primitives” that cooperate:

1) Encode experience — Every new message becomes an embedding and is added to a vector index so it can be recalled later.

2) Retrieve context — Instead of stuffing the whole chat into a prompt, the system searches memory for the most relevant anchors.

3) Update beliefs — Periodically, ANNIE generates a short belief summary (private, compact) and stores a latent vector representation.

4) Keep thinking — A background loop continues to “walk” memory, track novelty vs progress, and steer exploration toward what matters.

5) Answer cleanly — The user only sees the final response, while internal work stays internal.

The result is a system that behaves less like a one-shot chatbot and more like a tiny workspace: memory, state, and direction keep evolving even between messages.
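To make the encode and retrieve steps concrete, here is a minimal sketch, not ANNIE V2's actual code. The names `toy_embed` and `MemoryIndex` are hypothetical, and the hashed bag-of-words embedding is only a stand-in so the example runs with the standard library. It matches on shared words, whereas a real embedding model would be needed for true semantic similarity.

```python
import hashlib
import math


def toy_embed(text, dim=64):
    """Hypothetical stand-in for a real embedding model: hashed bag of words."""
    vec = [0.0] * dim
    for word in text.lower().split():
        # A stable hash keeps bucket assignment identical across runs.
        digest = hashlib.sha256(word.encode("utf-8")).hexdigest()
        vec[int(digest, 16) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


class MemoryIndex:
    """Brute-force vector index; a larger system would likely use an ANN library."""

    def __init__(self):
        self.items = []  # (text, unit vector) pairs

    def add(self, text):
        self.items.append((text, toy_embed(text)))

    def search(self, query, k=2):
        q = toy_embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [(sum(a * b for a, b in zip(q, vec)), text)
                  for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


memory = MemoryIndex()
for message in [
    "the launch plan needs a landing page",
    "my cat prefers the window seat",
    "budget for the launch is two thousand dollars",
]:
    memory.add(message)

# Only the top-k anchors go into the prompt, not the whole chat history.
anchors = memory.search("the launch plan needs a landing page", k=2)
prompt_context = "\n".join(text for _, text in anchors)
print(prompt_context)
```

The brute-force scan is fine for a few thousand memories. The design point is that the prompt is built from a handful of retrieved anchors instead of the full transcript.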

The Diffusion Process (Planning in Latent Space)

One of the more experimental parts of ANNIE V2 was a diffusion-inspired approach to planning. The idea was to treat a “plan” not as text at first, but as a sequence of latent vectors—a rough internal trajectory.

Conceptually, it looks like this:

• Start from noise (an unformed internal trajectory).

• Condition that trajectory on a blended signal: the current goal, the belief vector, and retrieved memory anchors.

• Run an iterative denoise process that nudges the latent trajectory toward coherence over multiple steps.

• Finally, decode each latent step by mapping it back to human-readable text using nearest-neighbor retrieval from memory.

In plain English: ANNIE tries to “dream” a plan into existence by repeatedly refining an internal representation—then translating that internal structure into a compact set of actionable steps.
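The four steps above can be sketched in a few lines. This is an illustration under stated assumptions, not the project's implementation: `denoise_plan` and `decode` are hypothetical names, the blend weights and step sizes are arbitrary, and the hand-written update rule stands in for whatever denoiser ANNIE V2 actually used. The conditioning target slides from the belief vector toward the goal across plan steps, which is one simple way to get a trajectory, not a single point.

```python
import math
import random


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def blend(vectors, weights):
    """Weighted sum of equally sized vectors, rescaled to unit length."""
    dim = len(vectors[0])
    return normalize([sum(w * v[i] for v, w in zip(vectors, weights))
                      for i in range(dim)])


def denoise_plan(goal, belief, anchors, steps=3, iters=30, seed=0):
    rng = random.Random(seed)
    anchor_mean = blend(anchors, [1.0] * len(anchors))
    # Start from noise: one random latent vector per plan step.
    traj = [[rng.gauss(0.0, 1.0) for _ in goal] for _ in range(steps)]
    for it in range(iters):
        noise = 0.1 * (1.0 - (it + 1) / iters)  # shrinks to 0 on the last pass
        for s in range(steps):
            progress = s / max(steps - 1, 1)
            # Conditioning: early steps lean on the belief, late ones on the goal.
            target = blend([belief, goal, anchor_mean],
                           [1.0 - progress, progress, 0.3])
            # Hand-written denoise step: move toward the target, add fading noise.
            traj[s] = [x + 0.3 * (t - x) + noise * rng.gauss(0.0, 1.0)
                       for x, t in zip(traj[s], target)]
    return traj


def decode(traj, memory):
    """Map each latent step to the text of its nearest memory (by cosine)."""
    plan = []
    for latent in traj:
        unit = normalize(latent)
        best = max(memory, key=lambda m: sum(a * b for a, b in zip(unit, m[1])))
        plan.append(best[0])
    return plan


# Toy 3-dimensional "embeddings" chosen by hand for illustration.
notes = [
    ("review current notes", [1.0, 0.0, 0.0]),
    ("draft the outline", normalize([1.0, 1.0, 0.0])),
    ("ship the final report", [0.0, 1.0, 0.0]),
    ("unrelated memory", [0.0, 0.0, 1.0]),
]
belief, goal = [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]
traj = denoise_plan(goal, belief, anchors=[notes[1][1]])
plan = decode(traj, notes)
print(plan)
```

With these toy vectors the decoded plan starts at the note nearest the belief and ends at the note nearest the goal. Because decoding is nearest-neighbor lookup, a plan can only contain text that already exists in memory, which keeps the output grounded but limits novelty.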

Why This Was “AGI-Inspired” (Without the Hype)

ANNIE V2 was never positioned as AGI. It was a sandbox for testing whether adding a few cognitive components makes an LLM feel more like an agent:

• Continuity — memory that persists, not just context windows.

• Self-modeling — belief summaries that compress goals and constraints into a usable internal state.

• Intrinsic motivation — curiosity signals that sometimes explore instead of always exploiting the top memory hit.

• Iterative refinement — background loops that keep improving a direction rather than stopping at one answer.

• Observability — a UI that exposes what’s happening (ticks, pivots, state) so the system isn’t a black box.
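The intrinsic-motivation bullet can be read as an explore/exploit rule over retrieved memories. The sketch below assumes an epsilon-greedy policy, which is a swapped-in standard technique and not a confirmed detail of ANNIE V2. `pick_memory`, `adjust_explore_rate`, and all thresholds are hypothetical.

```python
import random


def pick_memory(scored, explore_rate, rng):
    """scored: list of (similarity, text). Returns (text, mode)."""
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Explore: with probability explore_rate, skip the top hit on purpose.
    if len(ranked) > 1 and rng.random() < explore_rate:
        return rng.choice(ranked[1:])[1], "explore"
    # Exploit: otherwise follow the most similar memory.
    return ranked[0][1], "exploit"


def adjust_explore_rate(rate, novelty, step=0.05, low=0.05, high=0.5,
                        threshold=0.3):
    """Raise the rate when recent ticks look stale, lower it otherwise."""
    rate = rate + step if novelty < threshold else rate - step
    return min(high, max(low, rate))


rng = random.Random(0)
scored = [
    (0.91, "notes on the launch plan"),
    (0.55, "an old idea about pricing"),
    (0.40, "a half-finished onboarding flow"),
]
rate = 0.2
for tick in range(5):
    text, mode = pick_memory(scored, rate, rng)
    # Assumption for the demo: exploiting the same hit yields low novelty.
    novelty = 0.1 if mode == "exploit" else 0.8
    rate = adjust_explore_rate(rate, novelty)
    print(tick, mode, text, round(rate, 2))
```

Clamping the rate between `low` and `high` keeps the loop from either freezing on one memory or wandering at random, and printing each tick is the kind of signal an observability panel could display.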

Where This Goes Next

ANNIE V2 proved the architecture: long-term memory, background cognition, and diffusion-inspired planning can live together in a local-first system. The next step is simplifying and repurposing these primitives into a single sharp end product, where “self-thinking” isn’t a demo feature but a practical advantage.